One of the OpenStack projects that seem to get less attention than others, such as Nova and Neutron, is the Cinder block storage service. I thought it might be helpful to others if I wrote up some of the things I’ve learned about Cinder. I’ll start, in this post, by walking through the basics of Cinder; then, following on my recent series on VMware vSphere with OpenStack Nova Compute, I’ll write a post on how vSphere uniquely integrates with this block storage service. First, let’s put Cinder in proper context by taking a look at the available storage options in OpenStack.


Storage Options in OpenStack

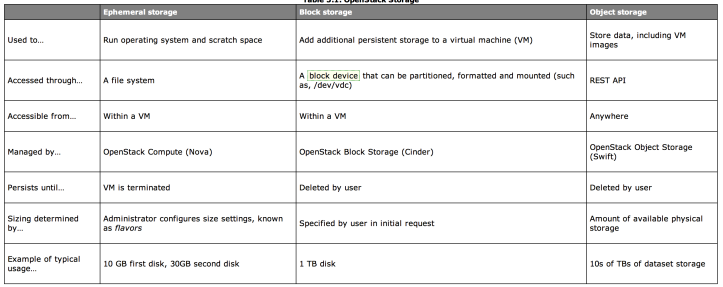

There are currently three storage options available with OpenStack: ephemeral, block (Cinder), and object (Swift) storage. The table from the OpenStack Operations Guide helpfully outlines the differences between the three options.

Cloud instances are typically created with at least one ephemeral disk, which is used to run the VM guest operating system and boot partition; an ephemeral disk is purged and deleted when an instance is terminated. Swift was included as one of the original OpenStack projects to provide durable, scale-out object storage. Initially, block storage services were created as a component of Nova Compute, called Nova Volumes; that component has since been broken out as its own project called Cinder.

So what are the characteristics of Cinder and what are some of its benefits? Cinder provides instances with block storage volumes that persist even when the instances they are attached to are terminated. A single block volume can only be attached to a single instance at any one time, but it can be detached from one instance and attached to another. The best analogy is a USB drive, which can be attached to one machine and then moved to a second machine, with any data on that drive staying intact across machines. An instance can attach multiple block volumes.
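
To make these attachment rules concrete, here is a minimal Python sketch. It is a toy model, not Cinder or Nova code: the `Volume` and `Instance` classes and the `demo` function are hypothetical names I made up for illustration. It only shows the three rules described above: a volume has at most one attachment, an instance can hold several volumes, and volume data survives instance termination while the ephemeral disk does not.

```python
class Volume:
    """Toy model of a persistent block volume (hypothetical, not Cinder code)."""

    def __init__(self, name, size_gb):
        self.name = name
        self.size_gb = size_gb
        self.attached_to = None   # at most one instance at any one time
        self.data = {}            # stands in for blocks written to the volume


class Instance:
    """Toy model of a cloud instance with an ephemeral disk."""

    def __init__(self, name):
        self.name = name
        self.volumes = []         # an instance may attach several volumes
        self.ephemeral = {}       # stands in for the ephemeral disk contents

    def attach(self, volume):
        if volume.attached_to is not None:
            raise RuntimeError(
                f"{volume.name} is already attached to {volume.attached_to.name}"
            )
        volume.attached_to = self
        self.volumes.append(volume)

    def detach(self, volume):
        self.volumes.remove(volume)
        volume.attached_to = None

    def terminate(self):
        # The ephemeral disk is purged; attached volumes are only detached.
        self.ephemeral.clear()
        for volume in list(self.volumes):
            self.detach(volume)


def demo():
    vol = Volume("data-vol", size_gb=10)
    first = Instance("vm-1")
    first.attach(vol)
    first.ephemeral["scratch"] = "temporary"
    vol.data["db"] = "important"
    first.terminate()

    # Like moving a USB drive: the same volume, data intact, on a new instance.
    second = Instance("vm-2")
    second.attach(vol)
    return first.ephemeral, second.volumes[0].data
```

Calling `demo()` returns an empty ephemeral disk for the terminated instance and the untouched volume data as seen from the second instance.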

In a Cloud platform such as OpenStack, persistent block storage has several potential use cases:


- If for some reason you need to terminate and relaunch an instance, you can keep any “non-disposable” data on Cinder volumes and re-attach them to the new instance.

- If an instance misbehaves or “crashes” unexpectedly, you can launch a new instance and attach Cinder volumes to that new instance with data intact.

- If a compute node crashes, you can launch new instances on surviving compute nodes and attach Cinder volumes to those new instances with data intact.

- Using a dedicated storage node or storage subsystem to host Cinder volumes, capacity can be provided that is greater than what is available via the direct-attached storage in the compute nodes (note that this is also true if using NFS with shared storage, but without data persistence).

- A Cinder volume can be used as the boot disk for a Cloud instance; in that scenario, an ephemeral disk is not required.

The Cinder Architecture

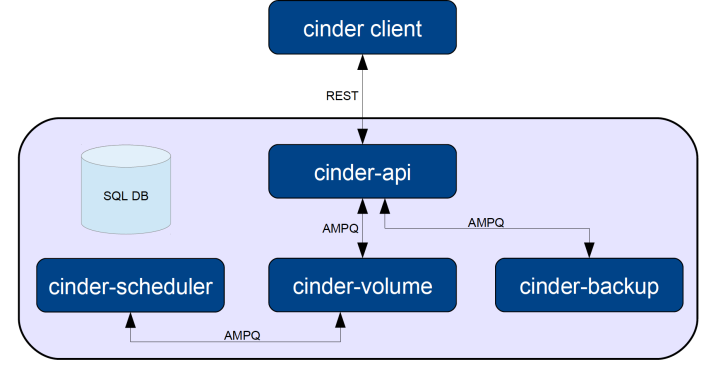

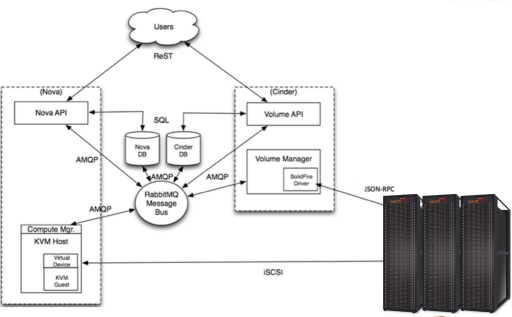

As seen in the diagram above from Avishay Traeger of IBM, the Cinder block service is delivered using the following daemons:


- cinder-api – A WSGI app that authenticates and routes requests throughout the Block Storage service. It supports the OpenStack APIs only, although there is a translation that can be done through Nova’s EC2 interface, which calls into the Cinder client.

- cinder-scheduler – Schedules and routes requests to the appropriate volume service. Depending upon your configuration, this may be simple round-robin scheduling to the running volume services, or it can be more sophisticated through the use of the Filter Scheduler.

- cinder-volume – Manages Block Storage devices, specifically the back-end devices themselves.

- cinder-backup – Provides a means to back up a Cinder Volume to various backup targets.
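
The difference between the two scheduling styles mentioned for cinder-scheduler can be sketched in a few lines of Python. This is a simplified illustration under my own assumptions, not the actual Cinder scheduler code: the backend dictionaries, the `free_gb` field, and the function names are hypothetical, and the filter step here looks only at free capacity.

```python
from itertools import cycle


def round_robin(backends):
    """Hand out volume services in turn, ignoring how full they are."""
    return cycle(backends)


def filter_and_weigh(backends, size_gb):
    """Filter out backends that cannot fit the volume, then pick the one
    with the most free space (a simplified filter-then-weigh approach)."""
    candidates = [b for b in backends if b["free_gb"] >= size_gb]
    if not candidates:
        raise LookupError("no backend can fit the requested volume")
    return max(candidates, key=lambda b: b["free_gb"])


# Hypothetical volume services and their free capacity.
BACKENDS = [
    {"host": "cinder-vol-1", "free_gb": 20},
    {"host": "cinder-vol-2", "free_gb": 500},
    {"host": "cinder-vol-3", "free_gb": 80},
]
```

With these sample backends, round-robin would place a 50 GB request on `cinder-vol-1` on its first turn even though that service has only 20 GB free, while `filter_and_weigh(BACKENDS, 50)` discards it and selects `cinder-vol-2`.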

Cinder Workflow Using Commodity Storage

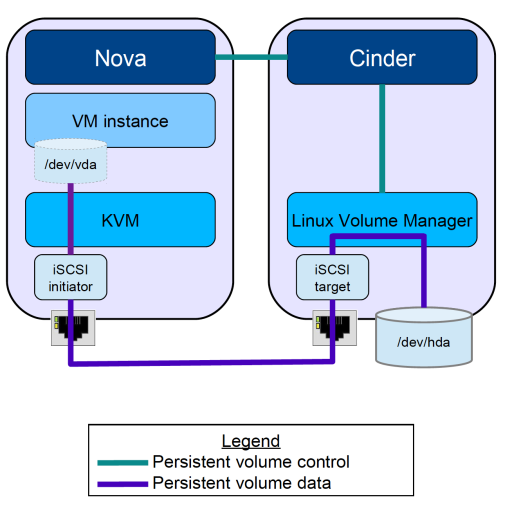

As originally conceived, all Cinder daemons can be hosted on a single Cinder node. Alternatively, all Cinder daemons except cinder-volume can be hosted on the Cloud Controller nodes, with the cinder-volume daemon installed on one or more cinder-volume nodes that function as virtual storage arrays (VSAs). These VSAs are typically Linux servers with commodity storage; volumes are created and presented to compute nodes using Logical Volume Manager (LVM).

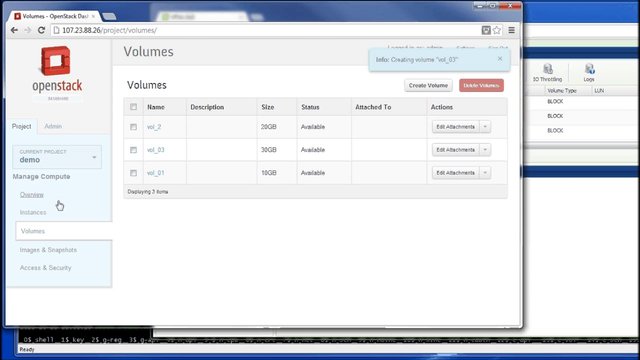

The following high-level procedure, outlined in the OpenStack Cloud Administrator Guide and visualized in a diagram from Avishay Traeger of IBM, demonstrates how a Cinder volume is attached to a Cloud instance when invoked via the command line, the Horizon dashboard, or the OpenStack API:

- A volume is created through the cinder create command. This command creates a logical volume (LV) in the volume group (VG) “cinder-volumes.”

- The volume is attached to an instance through the nova volume-attach command. This command creates a unique iSCSI IQN that is exposed to the compute node.

- The compute node, which runs the instance, now has an active iSCSI session and new local storage (usually a /dev/sdX disk).

- Libvirt uses that local storage as storage for the instance. The instance gets a new disk, usually a /dev/vdX disk.
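
The four steps above can be traced with a small Python sketch that records what each stage produces. It does not call Cinder, Nova, LVM, or iSCSI tooling; it only models the naming chain from LV to IQN to host disk to guest disk. The `volume-<id>` naming and the IQN prefix are assumptions I chose for illustration, and the function names are hypothetical.

```python
VOLUME_GROUP = "cinder-volumes"              # VG name from step 1
IQN_PREFIX = "iqn.2010-10.org.openstack:"    # assumed prefix, for illustration only


def create_volume(volume_id):
    """Step 1: an LV is carved out of the volume group."""
    lv_name = f"volume-{volume_id}"          # assumed naming scheme
    return {
        "id": volume_id,
        "lv_name": lv_name,
        "lv_path": f"/dev/{VOLUME_GROUP}/{lv_name}",
    }


def attach_volume(volume, host_disk_letter, guest_disk_letter):
    """Steps 2-4: the LV is exported under a unique IQN, the compute node
    logs in and sees a /dev/sdX disk, and libvirt hands it to the guest
    as a /dev/vdX disk."""
    return {
        "iqn": IQN_PREFIX + volume["lv_name"],
        "host_device": f"/dev/sd{host_disk_letter}",
        "guest_device": f"/dev/vd{guest_disk_letter}",
    }


volume = create_volume("1234")
attachment = attach_volume(volume, host_disk_letter="b", guest_disk_letter="b")
```

The point of the sketch is that the same volume carries three different names along the way: an LV path on the cinder-volume node, an IQN on the wire, and a local device on both the compute node and the guest.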

Note that beginning in the Grizzly release, Cinder supports Fibre Channel, but for KVM only.


Cinder Workflow Using Enterprise Storage Solutions

Cinder also supports drivers that allow Cinder volumes to be created and presented using storage solutions from vendors such as EMC, NetApp, Ceph, and GlusterFS. These solutions can be used in place of Linux servers with commodity storage leveraging LVM.

Each vendor’s implementation is slightly different, so I would advise readers to look at the OpenStack Configuration Reference Guide to understand how a specific storage vendor implements Cinder with its solution. As an example, James Ruddy, from the Office of the CTO at EMC, has a recent blog post walking through installing Cinder with ViPR. Note that the architecture and workflow are similar across all vendors, except that the cinder-volume node communicates with the storage solution to manage volumes but does not function as a VSA that creates and presents Cinder volumes using storage attached to the cinder-volume node.

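
One way to picture the difference between the commodity and enterprise setups is as two implementations of the same driver interface. The Python sketch below is a deliberately simplified model under my own assumptions: the class and method names are hypothetical and do not reproduce the real Cinder driver API. It only illustrates that in the VSA case the cinder-volume node both manages and serves the volume, while in the external-array case it only sends management requests and the volume is presented by the storage solution.

```python
from abc import ABC, abstractmethod


class VolumeDriver(ABC):
    """Hypothetical, simplified driver interface (not the real Cinder API)."""

    @abstractmethod
    def create_volume(self, name, size_gb):
        """Create a volume on the backend."""

    @abstractmethod
    def presented_by(self):
        """Return the system that presents the volume to compute nodes."""


class LocalLVMDriver(VolumeDriver):
    """cinder-volume node acting as a VSA with its own attached storage."""

    def __init__(self, node_address):
        self.node_address = node_address
        self.volumes = {}

    def create_volume(self, name, size_gb):
        self.volumes[name] = size_gb          # stands in for creating an LV
        return name

    def presented_by(self):
        return self.node_address              # the node itself serves the volume


class ExternalArrayDriver(VolumeDriver):
    """cinder-volume node managing volumes on a separate storage solution."""

    def __init__(self, array_address):
        self.array_address = array_address
        self.requests = []

    def create_volume(self, name, size_gb):
        # Only a management request is recorded; the array owns the storage.
        self.requests.append(("create", name, size_gb))
        return name

    def presented_by(self):
        return self.array_address             # the array serves the volume
```

In this model the rest of the workflow does not need to know which driver is in use, which matches the observation that the architecture and workflow stay similar across vendors.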

I am intentionally keeping to the basics in this post, leaving out Cinder features such as snapshots and boot from volume. For more information on other facets of Cinder, I would encourage readers to read through the OpenStack Configuration Reference Guide and the OpenStack Cloud Administrator Guide. There, you can also get walk-throughs on how to set up Cinder using commodity hardware and enterprise storage solutions. In part 2, I detail what VMware has done in Havana to integrate Cinder with vSphere.


Hello.

1. I saw that you used a couple of my slides here. I have a new version of that slide deck available: http://www.slideshare.net/avishaytraeger/cinder-havana

2. “All Cloud instances are created with at least one ephemeral disk” – not necessarily – boot from volume is available as well.

3. Cinder-backup is not only for backing up to object storage – IBM TSM and Ceph are supported as well in Havana

4. Fibre Channel support has been available since Grizzly

5. “Cinder Workflow Using Enterprise Storage Solutions” – IBM had drivers available since Essex, and many other vendors had drivers before Grizzly too.

Avishay,

Thank you for commenting. I’ve made the suggested changes and linked to your presentation; I appreciate the corrections.

Ken

Nice article, maybe you could help me. I’ve tested OpenStack only in a single lab and everything is OK. Now it’s time to put it into production and we will buy a storage system, so a lot of doubts are emerging. First of all, /var/lib/nova/instances: should it be mounted on block storage (HP 3PAR)? Is that possible, and is it the best strategy? How will the storage be presented to Linux, as a /dev/hdX? Thank you.


Clarification request: When an instance receives the information about its block storage (iSCSI IQN), that refers to the Cinder location and not that of the storage device. I.e., instances put and get data to/from storage THROUGH the Cinder node, not directly to the storage node (which is why the storage nodes don’t all need a copy of the storage vendor drivers)... correct? (Debate between my colleague and me.)

Hi, thanks for your good article.

Just FYI, it seems there was a small error in this sentence:

“alternatively, all cinder daemons, expect cinder-volume can be hosted on the Cloud Controller ”

It should be cinder-api, instead of cinder-volume.

Sorry, it seems expect –> except.