[I’ve updated this series with information based on the new Havana release and changes in vSphere integration with the latest version of OpenStack. Any changes are prefaced with the word “Havana” in bold brackets]

Having walked through several aspects of vSphere with OpenStack, I want to start putting some of the pieces together to demonstrate how an architect might begin to design a multi-hypervisor OpenStack Cloud. Our use case will be a deployment with KVM and vSphere; note that, for the sake of simplicity, I am deferring discussion of networking and storage to future blog posts. I encourage readers to review my previous posts on Architecture, Resource Scheduling, VM Migration, and Resource Overcommitment.

Cloud Segregation

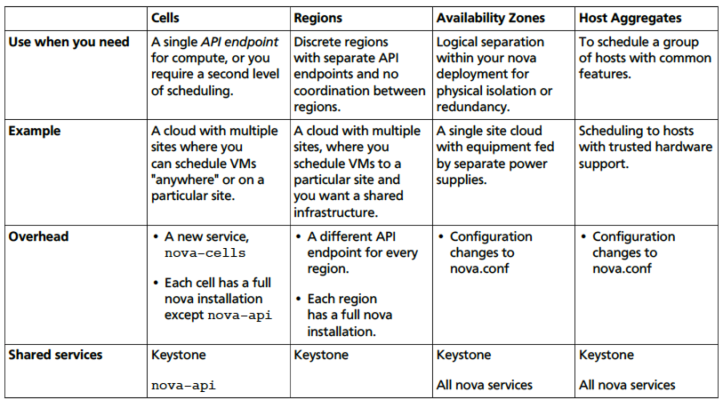

Before delving into our sample use case and OpenStack Cloud design, I want to review the different ways we can segregate resources in OpenStack; one of these methods, in particular, will be relevant in our multi-hypervisor Cloud architecture.

The table above and a description of each method can be found in the OpenStack Operations Guide. For the purposes of this post, we'll be leveraging Host Aggregates.

User Requirements

ACME Corporation has elected to deploy an OpenStack Cloud. As part of that deployment, ACME's IT group has decided to pursue a dual-hypervisor strategy using ESXi and KVM; the plan is to create a tiered offering where applications would be deployed on the most appropriate hypervisor, with a bias towards using KVM whenever possible since it is free. Their list of requirements for this new Cloud includes the following:

- Virtualize 6 Oracle servers that are currently running on bare-metal Linux. Each server has dual quad-core processors with 256 GB of RAM and is heavily used. ACME needs all of these Oracle servers to be highly available but has elected not to use Oracle Real Application Clusters (RAC).

- Virtualize 16 application servers that are currently running on bare-metal Linux. Each server has a single quad-core processor with 64 GB of RAM, although performance data indicates the server CPUs are typically only 20% utilized and could run on just 1 core. The application is home-grown, stateful, and sensitive to any type of outage or service disruption.

- Virtualize 32 Apache servers that are currently running on bare-metal Linux. Each server has a single quad-core processor with 8 GB of RAM. Given their moderate utilization, these servers can easily be consolidated. The application is stateless and new VMs can be spun up and added to the web farm as needed.

- Integrate 90 miscellaneous Virtual Machines running a mix of Linux and Windows. These VMs use an average of 1 vCPU and 4 GB of RAM.

- Maintain 20% free resource capacity across all hypervisor hosts for bursting and peaks.

- Utilize an N+1 design so that acceptable performance levels are maintained in case of a hypervisor host failure.

Cloud Controller

The place to start is with the Cloud Controller. A Cloud Controller is a collection of services that work together to manage the various OpenStack projects, such as Nova, Neutron, Cinder, etc. All services can reside on the same physical or virtual host or, since OpenStack utilizes a shared-nothing message-based architecture, services can be distributed across multiple hosts to provide scalability and HA. In the case of Nova, all services except nova-compute can run on the Controller, while the nova-compute service runs on the Compute Nodes.

For production, I would always recommend running two Cloud Controllers for redundancy and using a number of technologies such as Pacemaker, HAProxy, MySQL with Galera, etc. A full discussion of Controller HA is beyond the scope of this post. You can find more information in the OpenStack High Availability Guide, and I also address it in my slide deck on HA in OpenStack. For scalability, a large Cloud implementation can leverage the modular nature of OpenStack to distribute various services across multiple Controller nodes.

Compute Nodes

The first step in designing the Compute Node layer is to take a look at the customer requirements and map them to current capabilities within Nova Compute, beginning with the choice of which hypervisors to use for which workloads.

KVM

As discussed in previous posts, KVM with libvirt is best suited for workloads that are stateless in nature and do not require some of the more advanced HA capabilities found in hypervisors such as Hyper-V or ESXi with vCenter. For this customer use case, it would be appropriate to use KVM to host the Apache servers and the miscellaneous VMs.

From a sizing perspective, we will use a 4:1 vCPU to pCPU overcommitment ratio for the Apache servers, which is slightly more aggressive than the 3:1 vCPU to pCPU overcommitment I advocated in my previous Resource Overcommitment post; however, I am comfortable being a bit more aggressive since these web servers are only moderately utilized. For the miscellaneous servers, we will use the standard 3:1 overcommitment ratio. From a RAM perspective, we will maintain a 1:1 vRAM to pRAM ratio across both types of servers. The formula looks something like this:

((32 Apache * 4 pCPUs) / 4) + ((90 Misc VMs * 1 vCPU) / 3) = 62 pCPUs

((32 Apache * 8 pRAM) / 1) + ((90 Misc VMs * 4 vRAM) / 1) = 616 GB pRAM
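The two formulas above can be reproduced in a few lines of Python. This is a minimal sketch: the `Workload` tuple and `required_capacity` helper are hypothetical names introduced here, and the per-VM figures come straight from ACME's requirements (each Apache server's 4 current physical cores become 4 vCPUs). Fractions are used so that no rounding creeps in before the final step.

```python
from fractions import Fraction
from typing import NamedTuple


class Workload(NamedTuple):
    """Hypothetical description of one group of identical VMs."""
    count: int
    vcpus: int            # vCPUs per VM (Apache: its 4 current physical cores)
    ram_gb: int           # GB of RAM per VM
    cpu_ratio: Fraction   # vCPU:pCPU overcommitment ratio
    ram_ratio: Fraction   # vRAM:pRAM overcommitment ratio


def required_capacity(workloads):
    """Return (physical cores, physical GB of RAM) needed before any headroom."""
    p_cpu = sum(Fraction(w.count * w.vcpus) / w.cpu_ratio for w in workloads)
    p_ram = sum(Fraction(w.count * w.ram_gb) / w.ram_ratio for w in workloads)
    return p_cpu, p_ram


kvm_tier = [
    Workload(count=32, vcpus=4, ram_gb=8, cpu_ratio=Fraction(4), ram_ratio=Fraction(1)),  # Apache
    Workload(count=90, vcpus=1, ram_gb=4, cpu_ratio=Fraction(3), ram_ratio=Fraction(1)),  # misc VMs
]

p_cpu, p_ram = required_capacity(kvm_tier)
print(p_cpu, p_ram)  # 62 616
```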

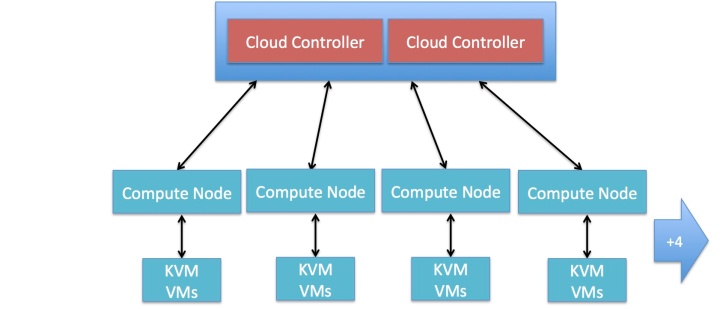

Using a standard dual hex-core server with 128 GB RAM for our Compute Node hardware standard, that works out to a minimum of 6 Compute Nodes, based on CPU, and 5 Compute Nodes, based on RAM. Please note the following:

- The quantity of servers would change depending on the server specifications.

- It is recommended that all the Compute Nodes for a common hypervisor have the same hardware specifications in order to maintain compatibility and predictable performance in the event of a Node failure (The exception is for Compute Nodes managing vSphere, which we will discuss later).

- Use the greater quantity of Compute Nodes determined by either the CPU or RAM requirements. In this use case, we want to go with 6 Compute Nodes.
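The node count comes from dividing each requirement by a single node's capacity, rounding up, and keeping the larger of the two results. A sketch, assuming the dual hex-core (12 cores), 128 GB node described above; `nodes_needed` is a hypothetical helper name.

```python
from fractions import Fraction
from math import ceil


def nodes_needed(p_cpu, p_ram_gb, cores_per_node, ram_gb_per_node):
    """Return (nodes by CPU, nodes by RAM, nodes to deploy)."""
    by_cpu = ceil(Fraction(p_cpu, cores_per_node))
    by_ram = ceil(Fraction(p_ram_gb, ram_gb_per_node))
    # Size for whichever resource runs out first.
    return by_cpu, by_ram, max(by_cpu, by_ram)


by_cpu, by_ram, nodes = nodes_needed(62, 616, cores_per_node=12, ram_gb_per_node=128)
print(by_cpu, by_ram, nodes)  # 6 5 6
```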

Starting with a base of 6 nodes, we then factor in the additional requirements for free resource capacity and N+1 design. To allow for the 20% free resource requirements, the formula looks something like this:

62 pCPU * 125% = 78 pCPU

616 GB pRAM * 125% = 770 GB pRAM

That works out to an additional Compute Node for a total of 7 overall. To factor in the N+1 design, we add another node for a total of 8 Compute Nodes.
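Multiplying by 125% is the same as dividing by 0.8, so the original demand ends up at 80% of installed capacity, leaving the required 20% free. The sketch below applies that headroom, re-derives the node count, and then adds one spare node for N+1. `size_with_headroom` is a hypothetical helper, and the pCPU target is rounded up as in the calculation above (77.5 becomes 78).

```python
from fractions import Fraction
from math import ceil

HEADROOM = Fraction(5, 4)  # 125%: demand becomes 80% of capacity, leaving 20% free


def size_with_headroom(p_cpu, p_ram_gb, cores_per_node, ram_gb_per_node, spares=1):
    """Return (pCPU target, GB RAM target, nodes before N+1, nodes with N+1)."""
    cpu_target = ceil(p_cpu * HEADROOM)
    ram_target = ceil(p_ram_gb * HEADROOM)
    nodes = max(ceil(Fraction(cpu_target, cores_per_node)),
                ceil(Fraction(ram_target, ram_gb_per_node)))
    return cpu_target, ram_target, nodes, nodes + spares


print(size_with_headroom(62, 616, cores_per_node=12, ram_gb_per_node=128))
# (78, 770, 7, 8)
```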

vSphere

Given the requirement for a highly available infrastructure underneath Oracle, this becomes a great use case for vSphere HA. For the purpose of this exercise, we will host the Oracle and application servers on VMs running on ESXi hypervisors. For vSphere, the design is a bit more complicated since we have to design at 3 layers: the ESXi layer, the vCenter layer, and the Compute Node layer.

ESXi Layer

Starting at the ESXi hypervisor layer we can calculate the following, starting with the Oracle servers:

((6 Oracle * 8 pCPUs) / 1) = 48 pCPUs

((6 Oracle * 256 GB pRAM) / 1) = 1536 GB pRAM

Note that because Oracle falls into the category of a Business Critical Application (BCA), we will follow the recommended practice of no overcommitment on CPU or RAM, as outlined in my blog post on Virtualizing BCAs. For Oracle licensing purposes, I would recommend using the largest ESXi hosts you feasibly can; you can find details on why in my blog post on virtualizing Oracle. If we use ESXi servers with dual octa-core processors and 512 GB RAM, we could start with 3 ESXi hosts in a cluster. To allow for the 20% free resource requirement, the formula looks something like this:

48 pCPU * 125% = 60 pCPU

1536 GB pRAM * 125% = 1920 GB pRAM

That works out to an additional ESXi host for a total of 4 overall. To factor in the N+1 design, we add another host for a total of 5 hosts in a cluster. For performance and Oracle licensing reasons, you should place these hosts into a dedicated cluster and create one or more additional clusters for non-Oracle workloads.
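Because the Oracle VMs are not overcommitted, the same arithmetic reduces to straight division. Here is a compact sketch of the whole Oracle cluster calculation, assuming dual octa-core (16 cores), 512 GB ESXi hosts as above; `size_cluster` is a hypothetical helper that folds the base count, the 125% headroom, and the N+1 spare into one function.

```python
from fractions import Fraction
from math import ceil

HEADROOM = Fraction(5, 4)  # 125% multiplier for 20% free capacity


def size_cluster(p_cpu, p_ram_gb, cores_per_host, ram_gb_per_host, spares=1):
    """Return (base hosts, hosts with headroom, hosts with N+1)."""
    def hosts_for(cpu, ram):
        # Round up per resource and size for the scarcer one.
        return max(ceil(Fraction(cpu) / cores_per_host),
                   ceil(Fraction(ram) / ram_gb_per_host))

    base = hosts_for(p_cpu, p_ram_gb)
    with_headroom = hosts_for(ceil(p_cpu * HEADROOM), ceil(p_ram_gb * HEADROOM))
    return base, with_headroom, with_headroom + spares


# 6 Oracle servers, 8 cores and 256 GB each, 1:1 for both CPU and RAM
oracle_cpu = 6 * 8      # 48 pCPUs
oracle_ram = 6 * 256    # 1536 GB pRAM
print(size_cluster(oracle_cpu, oracle_ram, cores_per_host=16, ram_gb_per_host=512))
# (3, 4, 5)
```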

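Because the application servers are stateful and sensitive to outages, they are also good candidates for being hosted on vSphere. Using a 4:1 vCPU to pCPU overcommitment ratio, in line with their low CPU utilization, and a modest 1.25:1 vRAM to pRAM ratio, we can calculate the number of ESXi hosts required as follows: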

((16 App * 4 pCPUs) / 4) = 16 pCPUs

((16 App * 64 GB pRAM) / 1.25) = 819 GB pRAM

Using dual quad-core servers with 256 GB RAM for our ESXi servers, that works out to a minimum of 2 ESXi hosts, based on CPU, and 4 ESXi hosts, based on RAM. Again, it is always recommended to go with the more conservative design and start with 4 ESXi hosts. To allow for the 20% free resource requirements, the formula looks something like this:
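For the application servers, the divisors in the formulas are the overcommitment ratios: 4:1 on CPU and 1.25:1 on RAM. The sketch below reproduces both results and the minimum host counts on dual quad-core (8 cores), 256 GB ESXi hosts. The exact RAM figure is 819.2 GB, which the formula above rounds to 819 GB; the variable names are hypothetical.

```python
from fractions import Fraction
from math import ceil

# 16 app servers, each with 4 cores and 64 GB today
app_cpu = Fraction(16 * 4) / 4                  # 4:1 vCPU:pCPU -> 16 pCPUs
app_ram = Fraction(16 * 64) / Fraction(5, 4)    # 1.25:1 vRAM:pRAM -> 819.2 GB

hosts_by_cpu = ceil(app_cpu / 8)      # 8 cores per ESXi host
hosts_by_ram = ceil(app_ram / 256)    # 256 GB per ESXi host
base_hosts = max(hosts_by_cpu, hosts_by_ram)  # the more conservative figure

print(float(app_cpu), float(app_ram), hosts_by_cpu, hosts_by_ram)
# 16.0 819.2 2 4
```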

16 pCPU * 125% = 20 pCPU

819 GB pRAM * 125% = 1024 GB pRAM

That works out to an additional ESXi host for a total of 5 overall. To factor in the N+1 design, we add another host for a total of 6 hosts in a cluster. Typically, you would place all 6 hosts into a single vSphere cluster.

vCenter Layer

I want to briefly discuss the issue of vCenter redundancy. In discussions with the VMware team, they have recommended creating a separate management vSphere cluster, outside of OpenStack, which would host the vCenter servers being managed by the OpenStack Compute Nodes. This would enable HA and DRS for the vCenter servers and maintain separation of the management plane from the objects under management. However, for simplicity's sake, I will assume here that we do not have vCenter redundancy and that the vCenter server will not be running virtualized.

Compute Node Layer

[Havana] In the Grizzly release, a Compute Node could only manage a single vSphere cluster. Because Havana allows one Compute Node to manage multiple ESXi clusters, we have the option of deploying just 1 Compute Node or 2 Compute Nodes for our use case. Note that there is currently no way to migrate a vSphere cluster from one Compute Node to another. In the event of a Compute Node failure, vSphere will continue running and vCenter can still be used for management, but the cluster cannot be reassigned to another Compute Node; once the managing Compute Node is restored, its connection to vCenter can be re-established.

In terms of redundancy, there is some question as to the recommended practice. The Nova Compute guide recommends virtualizing the Compute Node and hosting it on the same cluster it is managing; in talks with VMware's OpenStack team, they recommend placing the Compute Node in a separate management vSphere cluster so it can take advantage of vSphere HA and DRS. The rationale, again, is to design around the fact that a vSphere cluster cannot be migrated to another Compute Node. For simplicity's sake, I will assume that the Compute Nodes will not be virtualized.

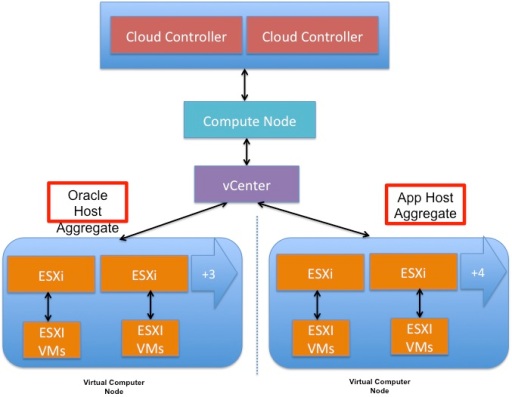

[Havana] Putting the three layers together, we have a design that looks something like this if we choose to consolidate management of our 2 vSphere clusters onto 1 Compute Node; as mentioned earlier, we also have the option in Havana to use 2 Compute Nodes, 1 per cluster, in order to separate failure zones at that layer.

The host aggregates above ensure that, when deployed through OpenStack, Oracle VMs will be spun up in the Oracle vSphere cluster and application servers in the app server vSphere cluster. More details about the use of host aggregates are provided in the next section.

Putting It All Together

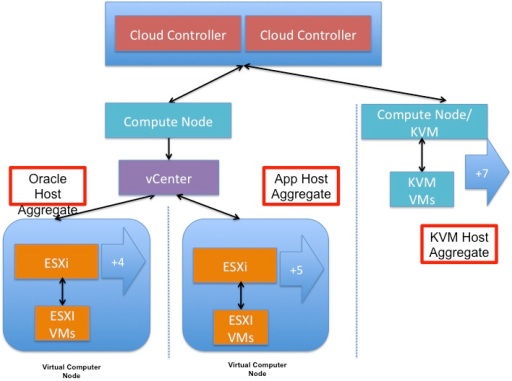

[Havana] A multi-hypervisor OpenStack cloud with KVM and vSphere may look something like this, using 1 Compute Node to manage vSphere; as mentioned earlier, we also have the option in Havana to use 3 Compute Nodes, 1 per cluster, in order to separate failure zones at that layer.

Note the use of Host Aggregates in order to partition Compute Nodes according to VM types. As discussed in my previous post on Nova-Scheduler, a vSphere cluster appears to the nova-scheduler as a single host. This can create unexpected decisions regarding VM placement. Also, it is important to be able to guarantee that Oracle VMs are spun up on the Oracle vSphere cluster, which utilizes more robust hardware. To design for this and to ensure correct VM placement, we can leverage a feature called Host Aggregates.
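To see why placement can be surprising, consider a toy model of the scheduler's filter-and-weigh step. It is not Nova's implementation: the host names and free-memory figures are hypothetical, and the weighing step only mimics the general idea of spreading VMs toward the host with the most free RAM. Because each vSphere cluster is presented as one host with pooled capacity, it can dwarf any single KVM node and attract even small, unrelated VMs.

```python
# Hypothetical free-RAM figures (GB) as a scheduler might see them.
# Each vSphere cluster shows up as ONE "host" reporting the cluster's pooled memory.
free_ram_gb = {
    "kvm-node-1": 40,
    "kvm-node-2": 36,
    "vsphere-oracle-cluster": 1024,
    "vsphere-app-cluster": 717,
}


def pick_host(hosts, requested_ram_gb):
    """Toy filter-then-weigh: drop hosts without enough free RAM,
    then prefer the one with the most free RAM."""
    candidates = {h: free for h, free in hosts.items() if free >= requested_ram_gb}
    if not candidates:
        raise RuntimeError("no valid host found")
    return max(candidates, key=candidates.get)


# A small 4 GB miscellaneous VM lands on the Oracle cluster simply because
# that single "host" reports the largest pool of free memory.
print(pick_host(free_ram_gb, 4))  # vsphere-oracle-cluster
```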

Host Aggregates are used with nova-scheduler to support automated scheduling of VM instances onto a subset of Compute Nodes, based on server capabilities. For example, to use Host Aggregates to ensure that specific VMs are spun up on Compute Nodes managing KVM, you would do the following:

- Create a new Host Aggregate called, for example, KVM.

- Add the Compute Nodes managing KVM into the new KVM Host Aggregate.

- Create a new instance flavor called, for example, KVM.medium with the correct VM resource specifications.

- Map the new flavor to the correct Host Aggregate.

Now when users request an instance with the KVM.medium flavor, the nova-scheduler will only consider Compute Nodes in the KVM Host Aggregate. We would follow the same steps to create an Oracle Host Aggregate and Oracle flavor. Any new instance requests with the Oracle flavor would be fulfilled by the Compute Node managing the Oracle vSphere cluster; that Compute Node would hand the new instance request to vCenter, which would then decide which ESXi host should be used.
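The matching logic behind these steps can be modeled in a few lines. This is a simplified sketch, not Nova's code: the aggregate names, the `tier` metadata key, the flavor names, and the compute node names are all hypothetical, and it assumes one Compute Node per vSphere cluster. In a real deployment, the steps typically map to nova CLI commands such as `nova aggregate-create`, `nova aggregate-add-host`, `nova aggregate-set-metadata`, `nova flavor-create`, and `nova flavor-key`, with `AggregateInstanceExtraSpecsFilter` enabled in the scheduler's filter list.

```python
# Hypothetical host aggregates: metadata plus member compute nodes.
aggregates = {
    "KVM": {"metadata": {"tier": "kvm"},
            "hosts": {"kvm-node-1", "kvm-node-2"}},
    "Oracle": {"metadata": {"tier": "oracle"},
               "hosts": {"compute-oracle-cluster"}},
}

# Hypothetical flavors and the extra specs they require.
flavors = {
    "KVM.medium": {"tier": "kvm"},
    "Oracle.xlarge": {"tier": "oracle"},
}


def host_passes(host, flavor_specs):
    """Simplified aggregate/extra-specs check: a flavor with no specs fits
    anywhere; otherwise some aggregate containing the host must carry
    every key/value pair the flavor asks for."""
    if not flavor_specs:
        return True
    return any(
        host in agg["hosts"]
        and all(agg["metadata"].get(k) == v for k, v in flavor_specs.items())
        for agg in aggregates.values()
    )


def eligible_hosts(all_hosts, flavor_name):
    specs = flavors[flavor_name]
    return sorted(h for h in all_hosts if host_passes(h, specs))


all_hosts = ["kvm-node-1", "kvm-node-2", "compute-oracle-cluster", "compute-app-cluster"]
print(eligible_hosts(all_hosts, "KVM.medium"))     # ['kvm-node-1', 'kvm-node-2']
print(eligible_hosts(all_hosts, "Oracle.xlarge"))  # ['compute-oracle-cluster']
```

Only the filtering step is modeled here; once the Oracle Compute Node is chosen, the choice of ESXi host is left to vCenter, as described above.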

[Havana] Of course, there are other infrastructure requirements to consider, such as networking and storage. As mentioned earlier, I hope to address these concerns in future blog posts over time. Also, you can freely access and download my "Cattle Wrangling For Pet Whisperers: Building A Multi-hypervisor OpenStack Cloud with KVM and vSphere" slide deck.

As always, all feedback is welcome.

Related articles

- OpenStack Compute For vSphere Admins, Part 3: HA And VM Migration (cloudarchitectmusings.com)

- OpenStack Compute For vSphere Admins, Part 4: Resource Overcommitment in Nova Compute (cloudarchitectmusings.com)

- VMware + OpenStack : A Brief but Strained History (siliconangle.com)

- Late To The Game, Is VMware Turning Against OpenStack Or Embracing It? (techcrunch.com)

- Deploying A HA OpenStack Cloud On Your Laptop (cloudarchitectmusings.com)

Very nice post!

Question: I am struggling a bit with how you would configure the Nova scheduler to support such an environment. It seems that the capability we are currently developing (targeting the Havana release) to support multiple scheduler policies (https://wiki.openstack.org/wiki/Nova/MultipleSchedulerPolicies) would be helpful, or even mandatory?

Very nice post!

From your experience is it possible to use both ESXi and KVM in a neutron scenario?

I am now in the situation of a perfectly working KVM havana infrastructure with OVS and neutron.

Is it possible to hook up an ESXi hypervisor, have you ever seen such a configuration, and do you have some references on how to do that?

My first impression is that it could be done only if you use NSX as the Neutron plugin (http://docs.openstack.org/admin-guide-cloud/content/ch_networking.html#d6e3999). Do you think the host aggregate approach would overcome this problem?

Thanks in advance,

Antonio

Antonio,

Thank you! Yes, it is possible to use Neutron in an OpenStack environment with vSphere and KVM. You are correct that NSX is required since you cannot run OVS in ESXi. Host Aggregates, Availability Zones, and other segregation methods will separate the hypervisors, but I believe NSX will still be required for networking across the whole OpenStack platform. If that has changed, hopefully someone from VMware will correct me.

Ken

What about the configuration described in this post? Was it realized with nova-network only?

Thanks,

Antonio

Antonio,

At the time of the post, I did not have access to NSX so I used Nova Networks since the nova compute is the same.

Kenneth, Antonio, currently vSphere works with both nova-network as well as Neutron with the NSX plugin. The nova-network support doesn’t currently support security groups, so it might only be applicable for initial testing or small environments where security groups may not be needed. For other environments, I’d recommend Neutron with NSX. We (VMware) are currently exploring other options for how we might support Neutron with vCloud Suite environments, but I can’t provide any firm details or timelines at this point.

I hope this helps!

Scott,

Thanx 4 the quick response. I think Antonio is asking if in a multi-hypervisor setup he would need to run NSX on the KVM as well as ESXi hosts? Or can he use ovs for the KVM compute nodes?

Ken

Kenneth, until ML2 support becomes much more widespread across the range of Neutron plugins, Antonio would have to choose a single Neutron plugin for his OpenStack implementation. So, he’d have to use NSX across the entire implementation. Keep in mind, however, that this means he’ll still be using OVS on his KVM hosts, but OVS would be managed by NSX.

Hi,

Very nice post. As you mentioned in the comments, I am using nova-network to support ESXi and KVM together, but I am facing some issues. I am able to launch instances on ESXi and they are in the running state, but when we go to the console it gives the error "Operating system not found".

Can you please help me fix this issue ?

Nice post, valuable stuff!!!