[I’ve updated this series with information based on the new Havana release and changes in vSphere integration with the latest version of OpenStack. Any changes are prefaced with the word “Havana” in bold brackets]

vSphere & Resource Scheduling in Nova Compute

In part 1, I gave an overview of the OpenStack Nova Compute project and how VMware vSphere integrates with that particular project. Before we get into actual design and implementation details, I want to review an important component of Nova Compute: resource scheduling, a.k.a. nova-scheduler in OpenStack and Distributed Resource Scheduler (DRS) in vSphere. I want to show how DRS compares with nova-scheduler and the impact of running both as part of an OpenStack implementation. Note that I can’t be completely exhaustive in this blog post but would recommend that everyone read the following:

- For DRS, the “bible” is “VMware vSphere 5 Clustering Technical Deepdive,” by Frank Denneman and Duncan Epping.

- For nova-scheduler, the most comprehensive treatment I’ve read is Yong Sheng Bong’s excellent blog post on “OpenStack nova-scheduler and its algorithm.” It’s a must read if you want to dive deep into the internals of the nova-scheduler.

OpenStack nova-scheduler

Nova Compute uses the nova-scheduler service to determine which compute node should be used when a VM instance is to be launched. The nova-scheduler, like vSphere DRS, makes this automatic initial placement decision using a number of metrics and policy considerations, such as available compute resources and VM affinity rules. Note that unlike DRS, the nova-scheduler does not do periodic load-balancing or power-management of VMs after the initial placement. The scheduler has a number of configurable options that can be accessed and modified in the nova.conf file, the primary file used to configure nova-compute services in an OpenStack Cloud.

    scheduler_driver=nova.scheduler.multi.MultiScheduler
    compute_scheduler_driver=nova.scheduler.filter_scheduler.FilterScheduler
    scheduler_available_filters=nova.scheduler.filters.all_filters
    scheduler_default_filters=AvailabilityZoneFilter,RamFilter,ComputeFilter
    least_cost_functions=nova.scheduler.least_cost.compute_fill_first_cost_fn
    compute_fill_first_cost_fn_weight=-1.0

The two variables I want to focus on are “scheduler_default_filters” and “least_cost_functions.” They represent two algorithmic processes used by the Filter Scheduler to determine initial placement of VMs. (There are two other schedulers, the Chance Scheduler and the Multi Scheduler, that can be used in place of the Filter Scheduler; however, the Filter Scheduler is the default and should be used in most cases.) These two processes work together to balance workload across all existing compute nodes at VM launch, in much the same way that Dynamic Entitlements and Resource Allocation Settings are used by DRS to balance VMs across an ESXi cluster.

Filtering (scheduler_default_filters)

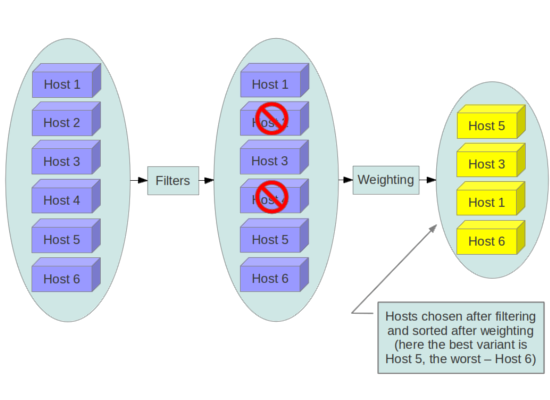

When a request is made to launch a new VM, the nova-compute service contacts the nova-scheduler to request placement of the new instance. The nova-scheduler uses the scheduler named in the nova.conf file, by default the Filter Scheduler, to determine that placement. First, a filtering process determines which hosts are eligible for consideration and creates an eligible host list. Then a second algorithm, Costs and Weights (described later), is applied against the list to determine which compute node is optimal for fulfilling the request.
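To make the two-phase flow concrete, here is a minimal Python sketch of "filter first, then pick the lowest-cost survivor." This is not Nova's actual code; the `Host` class, the `schedule` function, the two filter functions, and the host values are all hypothetical names and numbers I made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    # Hypothetical, simplified view of a compute node.
    name: str
    zone: str
    free_ram_mb: int


def zone_filter(host, request):
    # Keep only hosts in the requested availability zone.
    return host.zone == request["zone"]


def ram_filter(host, request):
    # Keep only hosts with enough free RAM (no overcommit in this sketch).
    return host.free_ram_mb >= request["ram_mb"]


def schedule(hosts, filters, cost_fn, request):
    """Phase 1: drop ineligible hosts. Phase 2: choose the lowest-cost host."""
    eligible = [h for h in hosts if all(f(h, request) for f in filters)]
    if not eligible:
        raise RuntimeError("No valid host found")
    return min(eligible, key=cost_fn)


hosts = [
    Host("host1", "az1", 8192),
    Host("host2", "az2", 2048),
    Host("host3", "az1", 6144),
    Host("host4", "az2", 2048),
]
request = {"zone": "az1", "ram_mb": 4096}

# Cost is the negative of free RAM, so the host with the most free RAM wins.
chosen = schedule(hosts, [zone_filter, ram_filter], lambda h: -h.free_ram_mb, request)
print(chosen.name)
```

The point of the design is that filters are yes/no gates while the cost function only ranks hosts that already passed every gate.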

The Filtering process uses the scheduler_default_filters configuration option in nova.conf to determine which filters will be applied to remove ineligible compute nodes and create the eligible hosts list (the scheduler_available_filters option defines the pool of filters that can be named there). By default, three filters are used:

- The AvailabilityZoneFilter filters out hosts that do not belong to the Availability Zone specified when a new VM launch request is made via the Horizon dashboard or from the nova CLI client.

- The RamFilter ensures that only nodes with sufficient RAM make it on to the eligible host list. If the RamFilter is not used, the nova-scheduler may over-provision a node with insufficient RAM resources. By default, the filter is set to allow overcommitment on RAM by 50%, i.e. the scheduler will allow a VM requiring 1.5 GB of RAM to be launched on a node with only 1 GB of available RAM. This setting is configurable by changing the “ram_allocation_ratio=1.5” setting in nova.conf.

- The ComputeFilter filters out any nodes that do not have sufficient capabilities to launch the requested VM as it corresponds to the requested instance type. It also filters out any nodes that cannot support properties defined by the image that is being used to launch the VM. These image properties include architecture, hypervisor, and VM mode.
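The RAM overcommitment arithmetic can be sketched in a few lines of Python. This is my simplified reading of the check, not the RamFilter source; the function name and its parameters are assumptions chosen for illustration, with only `ram_allocation_ratio` taken from nova.conf.

```python
def ram_filter_passes(total_ram_mb, used_ram_mb, requested_ram_mb,
                      ram_allocation_ratio=1.5):
    """Return True if the request fits under the overcommitted RAM limit.

    Hypothetical helper: the limit is physical RAM times the allocation
    ratio, and the request must fit in whatever is left under that limit.
    """
    ram_limit_mb = total_ram_mb * ram_allocation_ratio
    usable_ram_mb = ram_limit_mb - used_ram_mb
    return usable_ram_mb >= requested_ram_mb


# A node with 1 GB of RAM and nothing running accepts a 1.5 GB VM at ratio 1.5...
print(ram_filter_passes(1024, 0, 1536))
# ...but rejects the same VM when overcommitment is disabled (ratio 1.0).
print(ram_filter_passes(1024, 0, 1536, ram_allocation_ratio=1.0))
```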

As an example of how the Filtering process may work, host 2 and host 4 above may have been filtered out for any combination of the following reasons, assuming the default filters were applied:

- The requested VM is to be in Availability Zone 1 while nodes 2 and 4 are in Availability Zone 2.

- The requested VM requires 4 GB of RAM and nodes 2 and 4 each have only 2 GB of available RAM.

- The requested VM has to run on vSphere and nodes 2 and 4 support KVM.

There are a number of other filters that can be used along with or in place of the default filters; some of them include:

- The CoreFilter ensures that only nodes with sufficient CPU cores make it on to the eligible host list. If the CoreFilter is not used, the nova-scheduler may over-provision a node with insufficient physical cores. By default, the filter is set to allow overcommitment based on a ratio of 16 virtual cores to 1 physical core. This setting is configurable by changing the “cpu_allocation_ratio=16.0” setting in nova.conf.

- The DifferentHostFilter ensures that the VM instance is launched on a different compute node from a given set of instances, as defined in a scheduler hint list. This filter is analogous to the anti-affinity rules in vSphere DRS.

- The SameHostFilter ensures that the VM instance is launched on the same compute node as a given set of instances, as defined in a scheduler hint list. This filter is analogous to the affinity rules in vSphere DRS.
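The logic of these three filters can be sketched as follows. Again, this is an illustration under my own assumptions rather than the Nova implementation: the function names, parameters, and instance IDs are hypothetical, and only the `cpu_allocation_ratio` default of 16.0 comes from the text above.

```python
def core_filter_passes(physical_cores, vcpus_used, vcpus_requested,
                       cpu_allocation_ratio=16.0):
    # The vCPU limit is the physical core count times the allocation ratio.
    vcpu_limit = physical_cores * cpu_allocation_ratio
    return vcpus_used + vcpus_requested <= vcpu_limit


def different_host_passes(instances_on_host, hinted_instances):
    # Anti-affinity: pass only if none of the hinted instances run here.
    return not set(instances_on_host) & set(hinted_instances)


def same_host_passes(instances_on_host, hinted_instances):
    # Affinity: with no hint every host passes; otherwise the host must
    # already run at least one of the hinted instances.
    if not hinted_instances:
        return True
    return bool(set(instances_on_host) & set(hinted_instances))


# A 4-core node allows up to 64 vCPUs at the default 16:1 ratio.
print(core_filter_passes(4, 60, 4))
print(core_filter_passes(4, 61, 4))

running = ["vm-a", "vm-b"]  # hypothetical instance IDs on one host
print(different_host_passes(running, ["vm-a"]))
print(same_host_passes(running, ["vm-a"]))
```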

The full list of filters is available in the Filters section of the “OpenStack Compute Administration Guide.” The nova-scheduler is flexible enough that custom filters can be created and multiple filters can be applied simultaneously.
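To give a feel for what a custom filter might look like, here is a self-contained sketch. The `BaseHostFilter` class below is only a stand-in I wrote so the example runs on its own; it is modeled on the idea of a filter exposing a `host_passes` method, and the exact base class and signature in your Nova release should be checked against its source. The `HypervisorTypeFilter` class, the `hypervisor_type` hint key, and the host dictionaries are all hypothetical.

```python
class BaseHostFilter:
    """Stand-in base class for this sketch (not imported from Nova)."""

    def host_passes(self, host_state, filter_properties):
        raise NotImplementedError


class HypervisorTypeFilter(BaseHostFilter):
    """Hypothetical filter: keep hosts matching a requested hypervisor type."""

    def host_passes(self, host_state, filter_properties):
        hints = filter_properties.get("scheduler_hints") or {}
        wanted = hints.get("hypervisor_type")
        if wanted is None:
            # No hint supplied, so this filter does not restrict placement.
            return True
        return host_state["hypervisor_type"].lower() == wanted.lower()


def filter_hosts(hosts, filters, filter_properties):
    # A host is eligible only if every configured filter passes it.
    return [h for h in hosts
            if all(f.host_passes(h, filter_properties) for f in filters)]


hosts = [
    {"name": "kvm-node", "hypervisor_type": "kvm"},
    {"name": "vsphere-cluster", "hypervisor_type": "vmware"},
]
props = {"scheduler_hints": {"hypervisor_type": "vmware"}}
eligible = filter_hosts(hosts, [HypervisorTypeFilter()], props)
print([h["name"] for h in eligible])
```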

Costs and Weights

Next, the Filter Scheduler takes the hosts that remain after the filters have been applied and applies one or more cost functions to each host to get a numerical score for each host. Each cost score is multiplied by a weighting constant specified in the nova.conf config file. The algorithm is described in detail in the “OpenStack nova-scheduler and its algorithm” post I referenced previously. The weighting constant configuration option is the name of the cost function, with the _weight string appended. Here is an example of specifying a cost function and its corresponding weight:

    least_cost_functions=nova.scheduler.least_cost.compute_fill_first_cost_fn,nova.scheduler.least_cost.noop_cost_fn
    compute_fill_first_cost_fn_weight=-1.0
    noop_cost_fn_weight=1.0

There are three cost functions available; any of the functions can be used alone or in any combination with the other functions.

- The nova.scheduler.least_cost.compute_fill_first_cost_fn function calculates the amount of available RAM on the compute nodes and chooses which node is best suited for fulfilling a VM launch request based on the weight assigned to the function. A weight of 1.0 will configure the Scheduler to “fill-up” a node until there is insufficient RAM available. A weight of -1.0 will configure the scheduler to favor the node with the most available RAM for each VM launch request.

- The nova.scheduler.least_cost.retry_host_cost_fn function adds additional cost for retrying a node that was already used for a previous attempt. The intent of this function is to ensure that nodes which consistently encounter failures are used less frequently.

- The nova.scheduler.least_cost.noop_cost_fn function will cause the scheduler not to discriminate between any nodes. In practice this function is never used.
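The effect of the weight's sign is easier to see with numbers. The sketch below is a simplification under my own assumptions: each cost function returns a raw score, scores are multiplied by their weights and summed, and the host with the lowest total wins. The function names mirror the cost functions above, but the code, the node names, and the RAM values are illustrative and not taken from Nova.

```python
def compute_fill_first_cost(host):
    # Raw cost is simply the host's free RAM in MB.
    return host["free_ram_mb"]


def noop_cost(host):
    # Same cost for every host, so it never changes the ranking.
    return 1


def weighted_cost(host, weighted_fns):
    # Sum of weight * cost over all configured cost functions.
    return sum(weight * fn(host) for fn, weight in weighted_fns)


def pick_host(hosts, weighted_fns):
    # The scheduler chooses the host with the lowest weighted cost.
    return min(hosts, key=lambda h: weighted_cost(h, weighted_fns))


hosts = [
    {"name": "node-a", "free_ram_mb": 8192},
    {"name": "node-b", "free_ram_mb": 6144},
    {"name": "node-c", "free_ram_mb": 2048},
]

# Weight 1.0: least free RAM has the lowest cost, so nodes are filled up first.
fill_first = pick_host(hosts, [(compute_fill_first_cost, 1.0)])
# Weight -1.0: most free RAM has the lowest cost, so VMs are spread out.
spread = pick_host(hosts, [(compute_fill_first_cost, -1.0), (noop_cost, 1.0)])
print(fill_first["name"], spread["name"])
```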

The Cost and Weight function is analogous to the Share concept in vSphere DRS.

In the example above, if we choose to only use the nova.scheduler.least_cost.compute_fill_first_cost_fn function and set the weight to compute_fill_first_cost_fn_weight=1.0, we would expect the following results:

- All nodes would be ranked according to the amount of available RAM, starting with host 4.

- The nova-scheduler would favor launching VMs on host 4 until there is insufficient RAM available to launch a new instance.

DRS with the Filter Scheduler

Now that we’ve reviewed how Nova compute schedules resources and how it works compared with vSphere DRS, let’s look at how DRS and the nova-scheduler work together when integrating vSphere into OpenStack. Note that I will not be discussing Nova with the ESXDriver and standalone ESXi hosts; in that configuration, the filtering works the same as it does with other hypervisors. I will, instead, focus on Nova with the VCDriver and ESXi clusters managed by vCenter.

[Havana] As mentioned in my previous post, since vCenter abstracts the ESXi hosts from the nova-compute service, the nova-scheduler views each cluster as a single compute node/hypervisor host with resources amounting to the aggregate resources of all ESXi hosts in a given cluster. This has two effects:

- While the VCDriver interacts with the nova-scheduler to determine which cluster will host a new VM, the nova-scheduler plays NO part in where VMs are hosted in the chosen cluster. When the appropriate vSphere cluster is chosen by the scheduler, nova-compute simply makes an API call to vCenter and hands off the request to launch a VM. vCenter then selects which ESXi host in that cluster will host the launched VM, based on Dynamic Entitlements and Resource Allocation settings (DRS should be enabled with “Fully automated” placement turned on). Any automatic load balancing or power management by DRS is allowed but will not be known to Nova compute.

- In the example above, the KVM compute node has 8 GB of available RAM while the nova-scheduler sees the “vSphere compute node” as having 12 GB RAM. Again, the nova-scheduler does not take into account the RAM in the vCenter Server or the actual available RAM in each individual ESXi host; it only considers the aggregate RAM available in all ESXi hosts in a given cluster.

As I also mentioned in my previous post, the latter effect can cause issues with how VMs are scheduled/distributed across a multi-compute node OpenStack environment. Let’s take a look at two use cases where the nova-scheduler may be impeded in how it attempts to schedule resources; we’ll focus on RAM resources, using the environment shown above, and assume we are allowing the default of 50% overcommitment on physical RAM resources.

- A user requests a VM with 10 GB of RAM. The nova-scheduler applies the Filtering algorithm and adds the vSphere compute node to the eligible host list even though neither ESXi host in the cluster has sufficient RAM resources, as defined by the RamFilter. If the vSphere compute node is chosen to launch the new instance, vCenter will then determine that there aren’t enough resources and will respond to the request based on DRS-defined rules.

- A user requests a VM with 4 GB of RAM. The nova-scheduler applies the Filtering algorithm and correctly adds all three compute nodes to the eligible host list. The scheduler then applies the Costs and Weights algorithm and favors the vSphere compute node, which it believes has more available RAM. This creates an imbalanced system where the hypervisor/compute node with the lower amount of available RAM may be incorrectly assigned the lower cost and favored for launching new VMs.
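Both use cases come down to the scheduler reasoning about an aggregate instead of individual hosts, which a little arithmetic shows. In this sketch I assume, purely for illustration, that the 12 GB vSphere cluster is made up of two ESXi hosts with 6 GB each; the 8 GB KVM node, the 12 GB aggregate, and the 1.5 ratio come from the text above, while the per-host split and all variable names are my own assumptions. I also simplify by applying the ratio directly to the RAM figures.

```python
RAM_ALLOCATION_RATIO = 1.5


def ram_limit_gb(ram_gb):
    # RAM the scheduler is willing to commit, including overcommitment.
    return ram_gb * RAM_ALLOCATION_RATIO


esxi_hosts_gb = [6, 6]              # assumed split of the cluster's RAM
cluster_gb = sum(esxi_hosts_gb)     # what nova-scheduler sees: 12 GB
kvm_node_gb = 8

# Use case 1: a 10 GB request passes on the aggregate, but no single
# ESXi host could actually satisfy it.
request_gb = 10
scheduler_accepts_cluster = ram_limit_gb(cluster_gb) >= request_gb
any_esxi_host_fits = any(ram_limit_gb(h) >= request_gb for h in esxi_hosts_gb)
print(scheduler_accepts_cluster, any_esxi_host_fits)

# Use case 2: with a spreading weight (-1.0), the node with the most
# free RAM wins, so the 12 GB aggregate beats the 8 GB KVM node even
# though each ESXi host has less free RAM than the KVM node.
nodes = {"kvm": kvm_node_gb, "vsphere-cluster": cluster_gb}
favored = min(nodes, key=lambda name: -1.0 * nodes[name])
largest_single_esxi_gb = max(esxi_hosts_gb)
print(favored, largest_single_esxi_gb < kvm_node_gb)
```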

Another use case that is impacted by the fact that nova-scheduler does not have insight into individual ESXi servers has to do with the placement of legacy workloads in a multi-hypervisor Cloud. For example, due to their stateful nature and lack of application resiliency, most legacy applications are not suitable for running on a VM hosted on a hypervisor such as KVM with libvirt, which does not have vSphere-style HA capabilities; I talk about why that is the case in part 3 of this series.

In the use case above, a user requests a new instance to be spun up that will be used to house an Oracle application. Nova-scheduler chooses to instantiate this new VM on a KVM compute node/hypervisor host since it has the most available resources. As stated above, however, a vSphere cluster is probably a more appropriate solution.

So how do we design around this architecture? Unfortunately, the VCDriver is new to OpenStack and there does not seem to be a great deal of documentation or recommended practices available. I am hoping this changes shortly and I plan to help as best I can. I’ve been speaking with the VMware team working on OpenStack integration and I am hoping we’ll be able to collaborate on putting out documentation and recommended practices. As well, VMware is continuing to improve on the code they’ve already contributed and I expect some of these issues will be addressed in future OpenStack releases; I also expect that additional functionality will be added to Nova compute as OpenStack continues to mature.

After walking through some more architectural details behind vSphere integration with OpenStack, I will begin posting, in the near future, on how to design, deploy, and configure vSphere with OpenStack Nova compute. Please also see part 3 for information on HA and VM Migration in OpenStack, part 4 on Resource Overcommitment, and part 5 on Designing a Multi-Hypervisor Cloud.

It’s probably worth mentioning that nova-scheduler is quite modular, and that if the filters don’t meet your specific needs it’s easy to create a custom one and configure Nova to use it as well.

Andy,

Great point! I added the suggested language to the post.

Thank you.

Ken

Awesome reference! So, if I’m configuring only a vSphere cluster as the compute node for OpenStack, that means nova-scheduler will only see the total amount of resources and will place all instances within the configured cluster. To scale up, it will be a matter of either adding a new cluster inside vCenter with a dedicated VM as compute node or, more easily, adding more ESXi hosts to the current cluster.

Also, if you can point me to best practices for installing and configuring OpenStack for production usage, I’d really appreciate it.

Arturo,

Your understanding is correct. You can add more ESXi hosts to the same cluster to add more compute resources. There will be new enhancements in the Havana release of OpenStack in October, so stay tuned.

In terms of documentation, go to the openstack.org website and look on the documentation page; in particular, look for the Operations Guide. Also, there is an OpenStack Cookbook v2 that will be coming out later this year.

Ken

Hi Ken,

Thanks a lot for this series of articles on VMware + OpenStack.

I wonder how much things changed over the course of the Icehouse cycle. While I’m not overly concerned with legacy workload placement (it’s solvable with a hypervisor type hint for the scheduler), issues like the one you described in the “A user requests a VM with 10 GB of RAM” case seem to be quite unpleasant and not easy to work around. Is it something you could comment on? Many thanks

You are welcome. There have been improvements in Icehouse but not in terms of vSphere integration with the Nova scheduler. Keep in mind though that seeing an entire vSphere cluster as a single compute node is an issue if you do not use availability zones, host aggregates, etc. and allow Nova to choose which hypervisor to use. If you use something like scheduler hints to isolate hypervisors, then a VM will be launched in the right environment.

BTW, VMware is working on improving the communication between vCenter and Nova so that the Nova scheduler will have more visibility into a vSphere cluster.
